Overview

Round I & Round II: User interviews. We ran two rounds of user interviews:

one round to evaluate whether our initially proposed saving concept would meet users' need to keep a record of their media plans in Reach Planner.

another round to get feedback on our redesigned concept.

Round III: User Acceptance Testing (UAT) with user interviews. A final round of testing in the form of UAT surveys, gathering feedback over two weeks, followed by a short debrief interview on prolonged use prior to launch.

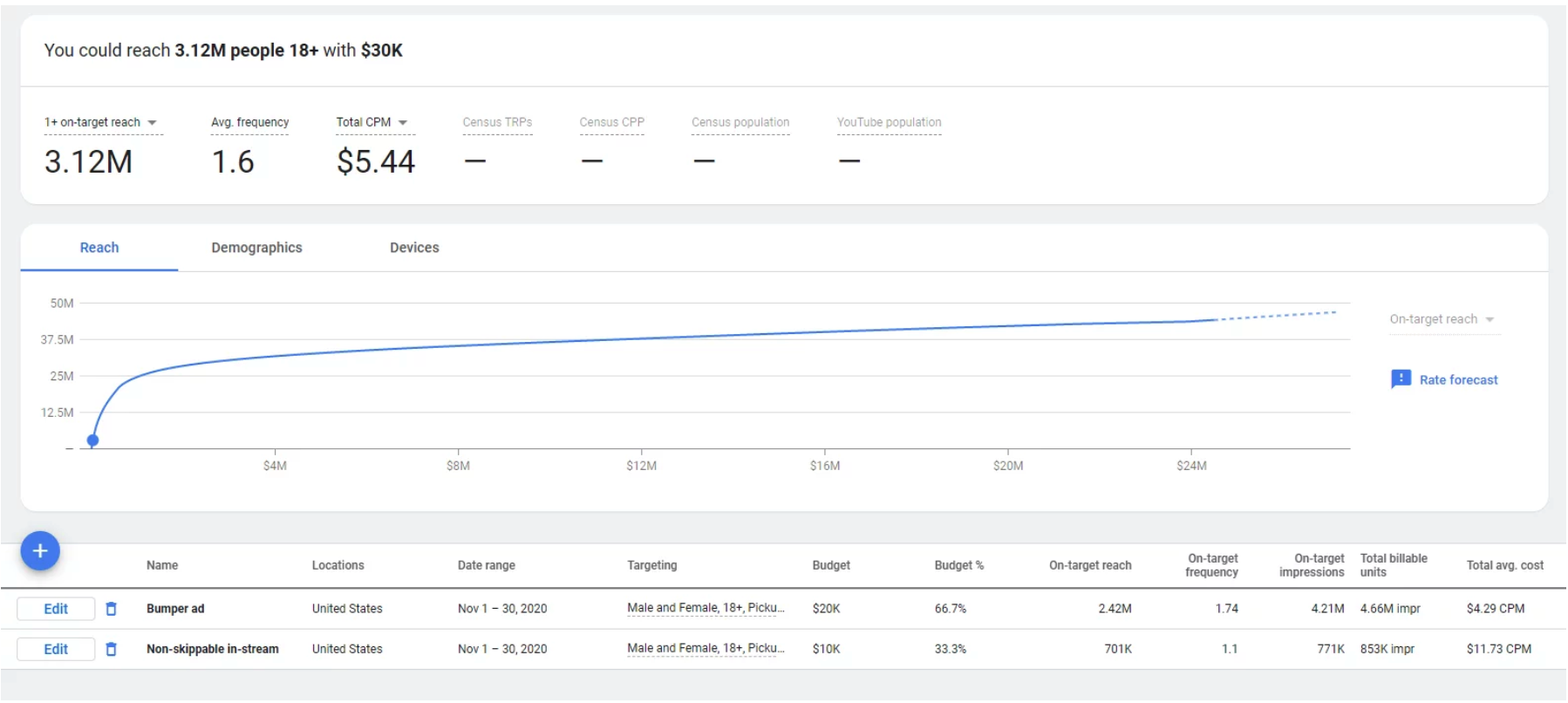

Google Ads Reach Planner

Saving Concept Evaluative Research

Google Ads Reach Planner example output

Goal:

To understand users' reactions to, and comprehension of, the proposed saving solution; gather feedback on the designs; and determine whether any updates were required before launch and, if so, which ones.

Role:

Lead UX researcher (UXR) and project manager; collaborated with and gathered input from Engineering, Product Management, UX Design, and Sales stakeholders during study planning and user interviews.

Worked with these stakeholders to generate insights and recommendations tailored to our engineering and design constraints.

Provided a "go" or "no-go" recommendation ahead of the launch of a long-requested feature.

Methods for Round I & Round II:

60-minute, 1:1 remote user interviews with a diverse group of users.

The design went through one round of iteration between Round I and Round II.

Methods for User Acceptance Testing (UAT):

Phase 1: Administered an intake survey after the initial session of using the saving feature with real, account-specific plan data (as opposed to mock data).

Phase 2: Administered a follow-up survey after one week of use to gather any complaints or issues.

Phase 3: Administered a final survey after two weeks of use, with an invitation to a follow-up debrief interview to add context to the survey responses.

Compared survey responses from the initial session, after one week, and after two weeks of use to observe any change in sentiment.

Results:

Phase 1: Users were excited about the ability to save their plans and said the feature would help in the many cases where a plan is not finalized in a single session.

However, upon loading a saved plan, the UI did not clearly communicate whether the plan output showed updated ("refreshed") data or outdated ("stale") data.

Phase 2: Users felt the updated concept clearly indicated when a plan required an action, such as a manual "refresh", to show up-to-date data.

However, users still wanted the differences between their saved plan and the "refreshed" plan output to be highlighted.

Phase 3: No users indicated that the launch should be paused, and most felt the feature would increase productivity by saving them the time spent recreating inputs or saving them externally for reference.

Challenges:

Pushing back on a long-anticipated release was difficult; however, the potential lack of transparency about whether plan data was stale was expected to hurt user trust.

Understanding the tool's technical and design limitations required many follow-up discussions with engineering and design stakeholders, but it ultimately helped us generate actionable recommendations.

Impact:

This research allowed us to delay, iterate on, and ultimately launch an important feature that may have contributed to higher user satisfaction, as indicated by an increased CSAT score the following quarter.

Users cited time savings, increased organization, and easy collaboration as main benefits of the save feature.